In the quest to build more sophisticated and semantically aware search applications, engineers are increasingly turning towards integrating Large Language Models (LLMs) with the power of Retrieval-Augmented Generation (RAG) using vector databases like Elasticsearch, ChromaDB, and qdrant. Drawing upon our experience here, we can try to demystify the process, challenges, and benefits of such integration.

The Advent of Hybrid Search Technologies

The digital universe is expanding at an unprecedented rate, making the search for relevant information increasingly complex. Traditional keyword-based search methods, while effective to a point, often fall short when it comes to understanding the context and nuance of user queries. Enter vector search and LLMs, technologies that promise a deeper understanding of content and queries alike by considering the semantics of language rather than just the presence of specific words.

Elasticsearch, a distributed search and analytics engine built on Apache Lucene, has been at the forefront of this evolution. It supports high-performance searches across large datasets and is now increasingly being used as the foundation for integrating LLMs and RAG to enhance search functionalities further.

Integrating LLMs with Elasticsearch

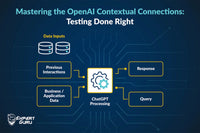

The integration process involves several key steps, beginning with the preparation of Elasticsearch to handle the vectorized data produced by LLMs. This entails setting up indices with dense vector fields to store document embeddings and utilizing Elasticsearch's KNN capabilities for vector similarity searching.

- Index Configuration: Define indices in Elasticsearch that include dense vector fields. These fields will store the vector representations of documents or text snippets, making them searchable in a way that accounts for semantic similarity.

- Data Ingestion: Populate your Elasticsearch index with pre-processed data. This involves generating vector embeddings for your documents using an LLM and indexing these vectors along with the documents.

- Query Processing: When a search query is received, it is first passed through an LLM to generate a query vector. Elasticsearch's KNN search is then used to find the most semantically similar documents based on these vector embeddings.

The Role of RAG in Enhancing Search Queries

Retrieval-Augmented Generation (RAG) brings an additional layer of sophistication to search applications by dynamically incorporating retrieved information into the response generation process. In this setup, Elasticsearch serves as the retrieval backbone, fetching relevant documents or text snippets that the LLM then uses to generate informed and contextually enriched responses.

This approach not only improves the accuracy of search results but also enables the application to provide answers that are synthesized from multiple sources, offering a comprehensive response to user queries that keyword searches alone could not achieve.

Challenges and Considerations

While the promise of integrating LLMs with Elasticsearch and leveraging RAG is compelling, it is not without challenges. These include ensuring the scalability of vector searches, managing the computational overhead of real-time vector generation for queries, and continuously updating the model and indices with new information to keep the search application relevant and accurate.

- Scalability and Performance: Vector searches, particularly over large datasets, can be resource-intensive. Engineers must carefully balance the size and dimensionality of vectors against search performance and accuracy.

- Model Selection and Updating: Choosing the right LLM for your application is crucial as is establishing a process for regularly updating the model and the indexed data to reflect new information and insights.

- User Query Understanding: The system must adeptly handle a wide range of user queries, translating them into vectors that accurately capture the user's search intent.

Conclusion

The integration of LLMs with Elasticsearch, particularly using RAG, represents a significant leap forward in search technology. It offers the promise of search results that are not only relevant but also richly contextual and semantically aware, providing users with insights and information that were previously beyond the reach of traditional search technologies. For engineers, navigating this landscape requires a blend of technical skills, strategic planning, and a deep understanding of the capabilities and limitations of the technologies involved. As these tools continue to evolve, so will the opportunities to create more intelligent, responsive, and useful search applications.