With the challenges of webhook management behind us, a new one looms on the horizon: mastering bulk updates in Elasticsearch. Imagine a rush of product changes arriving all at once, threatening to disrupt the delicate balance of real-time integration. How do you ensure that every update is reflected in your Elasticsearch index while maintaining data integrity and delivering fast search results to your users?

Setting the Scene: Understanding Elasticsearch's Role

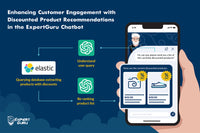

Before we dive into the complexities ahead, let's take a moment to understand the role of Elasticsearch in our ecosystem. Elasticsearch (ES) is a fast, scalable, distributed RESTful search and analytics engine. It acts as a centralized repository for the data behind fast search results, finely tuned relevancy, and powerful analytics. Within our ecosystem, ES powers the rapid retrieval of information for customers navigating Shopify stores via ExpertGuru. By indexing product details, policies, and pages, ES lets users quickly find relevant information, which improves their overall shopping experience.

Facing the Hurdles: Challenges in Bulk Updates

Yet, as we embark on this new journey, we encounter a fresh set of obstacles. While Elasticsearch offers immense potential for real-time data retrieval, keeping it current through bulk updates is hard for three reasons.

1. Volume and Velocity: As Shopify merchants navigate the ever-changing landscape of e-commerce, real-time updates become paramount. Shopify webhooks deliver a very large volume of product updates, which strains system performance and causes latency. As the number of updates grows, processing time grows with it, potentially affecting user experience and system stability.

2. OpenAI Rate Limits: Amidst the chaos, another enemy surfaces: the rate limits imposed by OpenAI. We use OpenAI to generate embeddings, so every product change that needs a new embedding counts against those limits. During peak activity, when many webhooks require embedding generation at the same time, the limits prevent us from updating in real time.

3. Dynamic Information Management: Prices, inventory levels, and product details fluctuate constantly. Ensuring that ES always reflects the most up-to-date values, without sacrificing data integrity, becomes harder the more often they change.

Handling Bulk Updates in Elasticsearch with ExpertGuru: Strategies and Process Refinement

With great volume comes great complexity, and the challenge lies in handling this deluge of data efficiently. To navigate these challenges, we've refined our approach and streamlined our processes:

1. Targeted Updates: Who and What

Our journey begins with a careful selection process, identifying the targets for bulk updates: merchants with paid subscriptions on ExpertGuru. This choice follows from the product recommendation feature, which is a premium service and the main consumer of the indexed product data. Focusing on these merchants ensures they get the full benefit of our services, including timely and accurate product recommendations tailored to their store's inventory and customer queries.

Next, we focus on the specific data that has to be considered: products. Whether it's adding new products, updating existing ones, or removing deleted items, our goal is to reflect these changes swiftly and accurately in Elasticsearch.

2. Dynamic Storage and Aggregation

Harnessing the power of the PlanetScale MySQL database, we consolidate multiple webhook updates into a single update, reducing the load on Elasticsearch and minimizing the number of requests sent to the system. This optimized process not only enhances efficiency but also addresses challenges such as OpenAI rate limits and indexing process time.

For instance, processing 1,000 products for Elasticsearch takes approximately 1 hour, because we use OpenAI to generate the embeddings stored in Elasticsearch. Without the SQL database, every webhook update would be indexed individually, so a product that changes several times a day would be embedded and indexed several times, adding significant time overhead. By aggregating updates in SQL and updating Elasticsearch at the end of the day, we consolidate multiple updates to the same product into a single update, which reduces indexing time and keeps us within OpenAI's rate limits.
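The consolidation step can be illustrated with a minimal sketch. It assumes each stored webhook event is a dictionary with hypothetical keys `product_id`, `topic` (one of `create`, `update`, `delete`), and `payload`; these names are illustrative and not ExpertGuru's actual schema. The idea is simply that only the last known state of each product needs to reach Elasticsearch.

```python
def coalesce_events(events):
    """Collapse an ordered list of webhook events into one pending action per product.

    `events` must be in arrival order. Keys and topic names are hypothetical.
    """
    pending = {}
    for event in events:
        pid = event["product_id"]
        topic = event["topic"]
        payload = event.get("payload")
        previous = pending.get(pid)

        if topic == "delete":
            if previous and previous["action"] == "create":
                # Created and deleted inside the same window: it was never
                # indexed, so there is nothing to send at all.
                del pending[pid]
            else:
                pending[pid] = {"action": "delete", "payload": None}
        elif previous and previous["action"] == "create":
            # Still a brand-new product; keep "create" with the newest payload.
            pending[pid] = {"action": "create", "payload": payload}
        else:
            # Latest payload wins; earlier updates are discarded.
            pending[pid] = {"action": topic, "payload": payload}
    return pending
```

With this approach, a product that receives ten price changes in a day needs one embedding request and one indexing request rather than ten. The sketch assumes product IDs are never reused after deletion within the same window.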

3. Minimizing Information Overload: Optimizing Embeddings

To optimize our bulk update process, we keep embeddings lean: only the essential information about each product goes into the embedded text, and it is kept in a structured format. This approach minimizes storage requirements and improves search performance, ensuring that our Elasticsearch index remains agile and responsive.
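As a rough illustration of what "only essential information in a structured format" might look like, the sketch below builds the text that would be sent for embedding. Which fields count as essential is an assumption made here for the example (`ESSENTIAL_FIELDS` and `embedding_text` are hypothetical names); the original pipeline may select different ones.

```python
import re

# Hypothetical choice of fields; volatile values such as price and
# inventory are deliberately left out of the embedded text.
ESSENTIAL_FIELDS = ("title", "product_type", "vendor", "tags", "description")


def embedding_text(product, max_chars=1000):
    """Build a compact, labelled text block from the essential product fields."""
    parts = []
    for field in ESSENTIAL_FIELDS:
        value = product.get(field)
        if not value:
            continue
        if isinstance(value, (list, tuple)):
            value = ", ".join(str(item) for item in value)
        # Strip HTML tags and collapse whitespace so markup is not embedded.
        value = re.sub(r"<[^>]+>", " ", str(value))
        value = re.sub(r"\s+", " ", value).strip()
        if value:
            parts.append(f"{field}: {value}")
    return "\n".join(parts)[:max_chars]
```

Leaving fast-changing values out of the embedded text has a useful side effect: a price or stock change alone does not force a new embedding request.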

4. Efficient Inventory Cross-Referencing

Keeping inventory data in PlanetScale MySQL allows us to cross-reference it during product recommendations. By verifying product availability in real time against the SQL database, we enhance the user experience while maintaining computational efficiency.
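A minimal sketch of this cross-check follows. It uses Python's built-in `sqlite3` purely as a runnable stand-in for PlanetScale MySQL, and the `inventory` table with `product_id` and `quantity` columns is a hypothetical schema, not the real one.

```python
import sqlite3


def filter_in_stock(conn, candidate_ids):
    """Return the candidate product IDs that are in stock, preserving ranking order."""
    if not candidate_ids:
        return []
    placeholders = ",".join("?" for _ in candidate_ids)
    rows = conn.execute(
        "SELECT product_id FROM inventory "
        f"WHERE quantity > 0 AND product_id IN ({placeholders})",
        list(candidate_ids),
    ).fetchall()
    available = {row[0] for row in rows}
    # Keep the order produced by the search engine; SQL only vetoes items.
    return [pid for pid in candidate_ids if pid in available]


# Usage with an in-memory database standing in for the real one.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE inventory (product_id INTEGER PRIMARY KEY, quantity INTEGER)")
conn.executemany("INSERT INTO inventory VALUES (?, ?)", [(1, 5), (2, 0), (3, 12)])
in_stock = filter_in_stock(conn, [3, 2, 1])
```

Here the search results supply the ranked candidates and a single SQL query removes anything out of stock, so inventory changes never require re-indexing in Elasticsearch.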

5. Efficient Management Through Product Status Tracking

To coordinate updates smoothly, each product in our SQL database carries a status parameter that indicates where it stands in the update process: updating, creating, deleting, or ready. The status dictates the action taken during indexing, which lets us prioritize and manage updates efficiently. Once a product has been indexed successfully, its status is updated in SQL, keeping the two stores consistent. This systematic approach optimizes resource utilization and protects the overall integrity of our database integration.
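One way to picture the status-driven step is the sketch below, which turns product rows into the newline-delimited body that the Elasticsearch bulk API accepts. The row keys (`product_id`, `status`, `document`), the index name `products`, and the helper names are assumptions for illustration. Treating both `creating` and `updating` as a full `index` operation is also a simplification chosen here, not necessarily what the original pipeline does.

```python
import json


def build_bulk_body(rows, index="products"):
    """Translate status-tagged product rows into an NDJSON bulk request body."""
    lines = []
    for row in rows:
        status = row["status"]
        pid = str(row["product_id"])
        if status == "ready":
            continue  # already in sync, nothing to send
        if status == "deleting":
            # Delete actions have no source line.
            lines.append(json.dumps({"delete": {"_index": index, "_id": pid}}))
        elif status in ("creating", "updating"):
            # "index" creates the document or replaces it if it exists.
            lines.append(json.dumps({"index": {"_index": index, "_id": pid}}))
            lines.append(json.dumps(row["document"]))
        else:
            raise ValueError(f"unknown status: {status!r}")
    if not lines:
        return ""
    # The bulk body must end with a newline.
    return "\n".join(lines) + "\n"


def next_status(status):
    """Status to store after a successful bulk item; None means remove the row."""
    return None if status == "deleting" else "ready"
```

After the bulk request returns, each item's result would be checked individually, and only the products that succeeded would move to `ready`; failed items keep their status and are retried in the next run.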

Conclusion

Elasticsearch serves as an essential part of our platform, empowering us to deliver unparalleled search experiences to users across Shopify stores with precision and expertise. Leveraging our robust backend infrastructure and efficient pipeline services, we were able to synchronize data between Elasticsearch and our PlanetScale MySQL database in a smooth and organized manner. From indexing new products to updating existing ones and removing outdated entries, every action is executed with finesse and care. This strategic approach ensures that our platform remains agile, responsive, and equipped to meet the evolving needs of our merchants and users.

As we continue to refine our processes and embrace innovative solutions, we remain committed to enhancing the overall user experience and driving success for our merchants.